Overview

In this article we will talk about applications of Hierarchical Neural Networks, essentially reasoning about code changes by finding embeddings or vector representation of code changes. This is based on a recent paper called “CC2Vec: Distributed Representations of Code Changes” by Hoang et al. 2020

Machine Learning on Code (#MLonCode community) is recently seeing a lot of research and is very emerging, especially with the availability of what is known as “Big Code” as huge data set stored across various version control systems (VCS).

We can learn from past bug fixing commits and representing code change as a vector is a great step towards achieving this goal in a more effective manner.

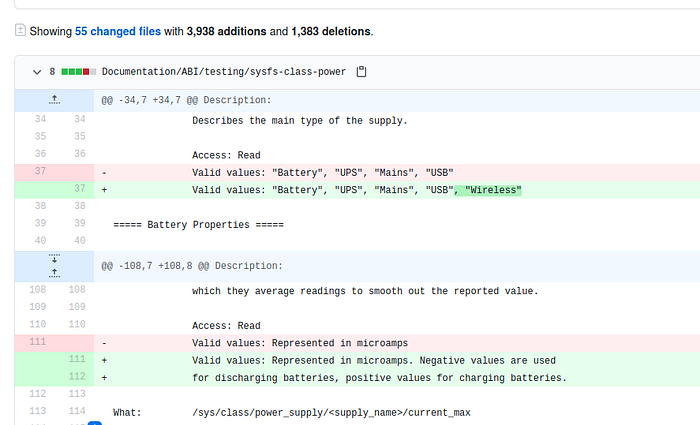

How Does a Code Change Look Like?

Well, a code change essentially consists of a commit message, usually affecting multiple files with lines being removed and added.

A code change follows a Hierarchical Structure. Each code change has a commit message, usually, it affects multiple files at a time with few lines being removed and few lines being added (Each such pair known as a code hunk). Also, these added/removed lines have tokens (keywords, identifiers, etc.).

Overview of CC2Vec

Once we have distributed representation of code, we can apply it to various applications, in the original paper of cc2vec, authors demonstrate improvements in three type of applications

- Given a code change vector, What should be the commit message?

- Does the code change fix a bug?

- Does the code change introduce a bug? -> Allocate more testing /quality assurance resources.

We will discuss the overall architecture for predicting words in the commit messages, given a code change. However, in a practical use case, this might not be super useful as this would just give the probability of a word being present in a commit message and may not be grammatically correct. But, the good part, once we have an effective code change feature vector, we can use it in other applications, especially for just-in-time bug prediction tasks.

We know, a code change usually affects multiple files, we apply Hierarchical Neural Networks to each file to obtain file vectors and then we concatenate them to form a larger feature vector. We finally pass it through a feed-forward neural network along with vector representation of commit message and train the network to obtain the probability of words being in a commit message for the new code change.

Data Extraction

As we discussed earlier, each code change ranges across multiple files, with multiple hunks (consecutive lines of modified code) and each line consists of a sequence of tokens.

We need to extract all this information from the code change.

Using Extracted Data

In this section we discuss, how do we actually use extracted data from code change. Starting with a line added, we can have multiple tokens (t1, t2… tk), we feed it to Recurrent Neural Network(RNN) with attention layers to learn important tokens (Useful when certain tokens can make a code change more prone to having a bug) to get line vectors.

With multiple lines, we get multiple line vectors, these line vectors are further fed into another RNN with attention layers to get a Hunk Vector.

Again with multiple Hunks, we get multiple hunk vectors, and we again pass it to RNN with attention layers to get the final file vector which represents the code change effectively preserving the hierarchical relationship and other details.

Finally, the same process is repeated for Lines Removed to get file vector for removed lines of code.

Comparison Layers

Once we have file vectors Ea and Er for added lines and removed lines respectively, we apply a set of comparison functions E.g., element-wise subtraction, Euclidean Distance, etc. to get One vector that summarises all changes in a file.

More on these comparison vectors can be studied in the original paper.

Final Prediction

Once we have Vectors for Changes in multiple files, we simply concatenate them to get the final Feature Vector. This vector essentially represents one code change and can be used in multiple applications.

An interesting point being, all the three RNNs are jointly trained to learn the appropriate weights and biases for code changes.

Applications

One can think of many applications once we have a feature vector, authors in paper CC2Vec applied learned code representation essentially to the following three tasks and showed performance improvement over baseline models/approaches.

- Given Code Change, Predict commit message

- Predict: Is a code change a bug fix?

Relevancy, e.g., to decide which code changes to backport to older software versions. - Just-in-time defect prediction.

Relevancy: Useful to allocate quality assurance resources(e.g., code reviews) to code changes.

Conclusion

CC2Vec looks very promising approach, especially in training a model that can learn from past buggy commits and can warn the developer in repeating the same bug inducing change again.

We at Embold (Embold being an AI-Driven, Static code analyzer) offer a Recommendation Engine (RE), that works on similar principles and can train itself from existing Version Control Data and Issue Systems such as Github issues and Jira and provide recommendations to developers when they tend to commit a buggy change in Source Code.

Do check us out at, embold.io

We look forward to more applications of the CC2Vec approach in the future.

Comments are closed.